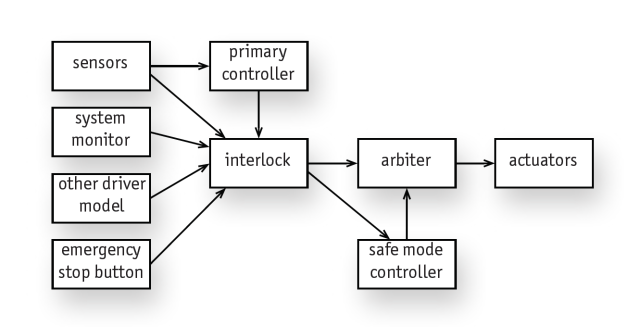

An architecture is proposed to mitigate the risks of autonomous driving. To compensate for the risk of failure in complex components that involve planning and learning (and other functions whose reliability cannot be assured), a small number of trusted components are inserted as an interlock to oversee the behavior of the rest of the system. When incipient failure is detected, the interlock switches the system to a safe mode in which control reverts to a conservative regime to prevent an accident. The work will build on research in assurance cases from software engineering and in probabilistic model checking from computer-aided verification.

Certified control is a new architecture for autonomous cars that offers the possibility of a small, verifiable trusted base without preventing the use of complex machine-learning algorithms for perception and control.

The key idea is to exploit the classic gap between the high cost of finding a solution to a problem and the much lower cost of checking that solution. The main controller plays the role of the solver, analyzing the scene and determining an appropriate next step, and the certifier plays the role of the checker, ensuring that the proposed step is safe.

To make this check possible, the main controller constructs a certificate that captures its analysis of the situation along with the proposed action. The main controller is thus excluded from the trusted base: when it works correctly, the certifier endorses its commands; when it fails, the certifier rejects the commands and a simpler controller brings the car to a safe stop. We have designed an architecture that embodies this idea, and demonstrated it in simulation and on a racecar.
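The split between solver and checker can be illustrated with a minimal Python sketch. It is not the project's implementation: the names `Certificate`, `certified_step`, `check_clear_path`, the evidence keys, and the braking constant are all hypothetical, and the toy check only tests the internal consistency of the reported numbers, whereas a real certificate would carry sensor data that the certifier can inspect independently.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional

# Assumed braking capability in m/s^2; an illustrative value, not from the source.
MAX_DECEL_MPS2 = 5.0


@dataclass(frozen=True)
class Certificate:
    """Hypothetical certificate: a proposed action plus supporting evidence."""
    action: str
    evidence: Dict[str, Any]


def check_clear_path(cert: Certificate) -> bool:
    """Toy check: the reported gap must exceed the stopping distance v^2 / (2a)."""
    speed = float(cert.evidence["speed_mps"])
    gap = float(cert.evidence["gap_m"])
    if speed < 0 or gap < 0:
        return False
    stopping_distance = speed ** 2 / (2 * MAX_DECEL_MPS2)
    return gap > stopping_distance


def certified_step(
    propose: Callable[[], Optional[Certificate]],
    check: Callable[[Certificate], bool],
    fallback: str = "safe_stop",
) -> str:
    """Return the proposed action only if its certificate passes the check.

    Any failure of the untrusted controller (no certificate, malformed
    evidence, or an exception) is treated as a rejection.
    """
    try:
        cert = propose()
        if cert is not None and check(cert):
            return cert.action
    except Exception:
        pass
    return fallback
```

In this sketch only `certified_step` and the check function would belong to the trusted base; `propose` can be arbitrarily complex because its output is never acted on without passing the check.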

So far, we have explored two examples of complex solving. The first involves finding lane lines using visual analysis. In this case, the certificate includes a signed camera image and a mathematical specification of purported lane lines. The checker ensures that the lane lines obey the standard conventions (i.e., that they are parallel and the right distance apart), and that they match the markings on the road, as given in the camera image. We have tested this approach on sample videos from the Open Pilot project, and shown that we are able to catch cases in which lane detection produces bad results.
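The geometric half of the lane check (parallel lines, plausible spacing) might look like the following sketch. It assumes a simplified bird's-eye model in which each lane line is a straight line x = slope * y + offset; the `LaneLine` type, the function name, and the default width and tolerances are illustrative assumptions rather than the project's actual specification. The comparison against the camera image is not shown.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LaneLine:
    """Hypothetical straight lane line in a top-down frame: x = slope * y + offset."""
    slope: float   # lateral change per metre travelled forward
    offset: float  # lateral position in metres at y = 0


def lanes_conform(
    left: LaneLine,
    right: LaneLine,
    expected_width_m: float = 3.7,  # assumed nominal lane width
    width_tol_m: float = 0.5,       # assumed tolerance
    max_angle_deg: float = 2.0,     # assumed tolerance on parallelism
) -> bool:
    """Check that two purported lane lines are near-parallel and correctly spaced."""
    # Parallelism: compare the headings of the two lines.
    angle = abs(math.atan(left.slope) - math.atan(right.slope))
    if math.degrees(angle) > max_angle_deg:
        return False
    # Spacing: perpendicular distance between two (near-)parallel lines
    # x - m*y - b1 = 0 and x - m*y - b2 = 0 is |b1 - b2| / sqrt(1 + m^2).
    mean_slope = (left.slope + right.slope) / 2
    width = abs(right.offset - left.offset) / math.sqrt(1 + mean_slope ** 2)
    return abs(width - expected_width_m) <= width_tol_m
```

A check like this is cheap and easy to audit, which is the point of the architecture: the hard work of finding the lines stays in the untrusted controller.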

The second involves filtering LiDAR data to remove spurious reflections from snow. The main controller applies an outlier-detection algorithm to remove points from the LiDAR cloud that correspond to snowflakes, and selects from what remains a set of points that cover the lane ahead with enough density to ensure that no obstacle larger than a certain size can be present. We have demonstrated this approach using a 3D Velodyne LiDAR mounted on our racecar, and have shown that the certifier correctly allows the case in which the car faces simulated snow, but rejects a certificate in which the filtering removes obstacles that are too large (such as some cables dangling in front of the car). More info: http://sdg.csail.mit.edu.ezproxy.canberra.edu.au/projects/interlock
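The density requirement in the LiDAR example can be approximated by a grid test, sketched below in Python as a simplified 2D stand-in for whatever the certifier actually does. The function name, the coordinate convention (x measured from the left lane edge, y forward from the car), and the choice of cell size are assumptions made for illustration. The cell side is half the largest tolerated obstacle, so any axis-aligned square footprint of that size must fully contain at least one cell; if every cell holds a selected point, no such footprint is free of points.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float]  # (x from left lane edge, y ahead of the car), metres


def lane_is_covered(
    points: Iterable[Point],
    lane_width_m: float,
    lookahead_m: float,
    max_obstacle_m: float,
) -> bool:
    """Return True if every grid cell of the lane region contains a selected point."""
    if lane_width_m <= 0 or lookahead_m <= 0 or max_obstacle_m <= 0:
        raise ValueError("dimensions must be positive")
    cell = max_obstacle_m / 2.0
    cols = math.ceil(lane_width_m / cell)
    rows = math.ceil(lookahead_m / cell)
    occupied = set()
    for x, y in points:
        # Ignore points outside the lane region being certified.
        if 0 <= x < lane_width_m and 0 <= y < lookahead_m:
            occupied.add((int(x // cell), int(y // cell)))
    return len(occupied) == cols * rows
```

Under this model, a filter that discards too many points leaves some cells empty and the certificate is rejected, which mirrors the dangling-cable case described above.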

[June-1-2018 to current]

Publications:

- D. Jackson, J. DeCastro, S. Kong, D. Koutentakis, A. Ping, A. Solar-Lezama, M. Wang, and X. Zhang, “Certified Control for Self-Driving Cars,” presented at the 4th Workshop on the Design and Analysis of Robust Systems, 2019 [Online]. Available: https://sites.google.com/view/dars2019/home?authuser=1